Intel Launches 4th Gen Xeon Scalable Processors, Max Series CPUs

Intel highlights broad industry adoption across all major CSPs, OEMs, ODMs and ISVs, and showcases increased performance in AI, networking and high performance computing.

NEWS HIGHLIGHTS

- Expansive customer and partner adoption from AWS, Cisco, Cloudera, CoreWeave, Dell Technologies, Dropbox, Ericsson, Fujitsu, Google Cloud, Hewlett Packard Enterprise, IBM Cloud, Inspur Information, IONOS, Lenovo, Los Alamos National Laboratory, Microsoft Azure, NVIDIA, Oracle Cloud, OVHcloud, phoenixNAP, RedHat, SAP, SuperMicro, Telefonica and VMware, among others.

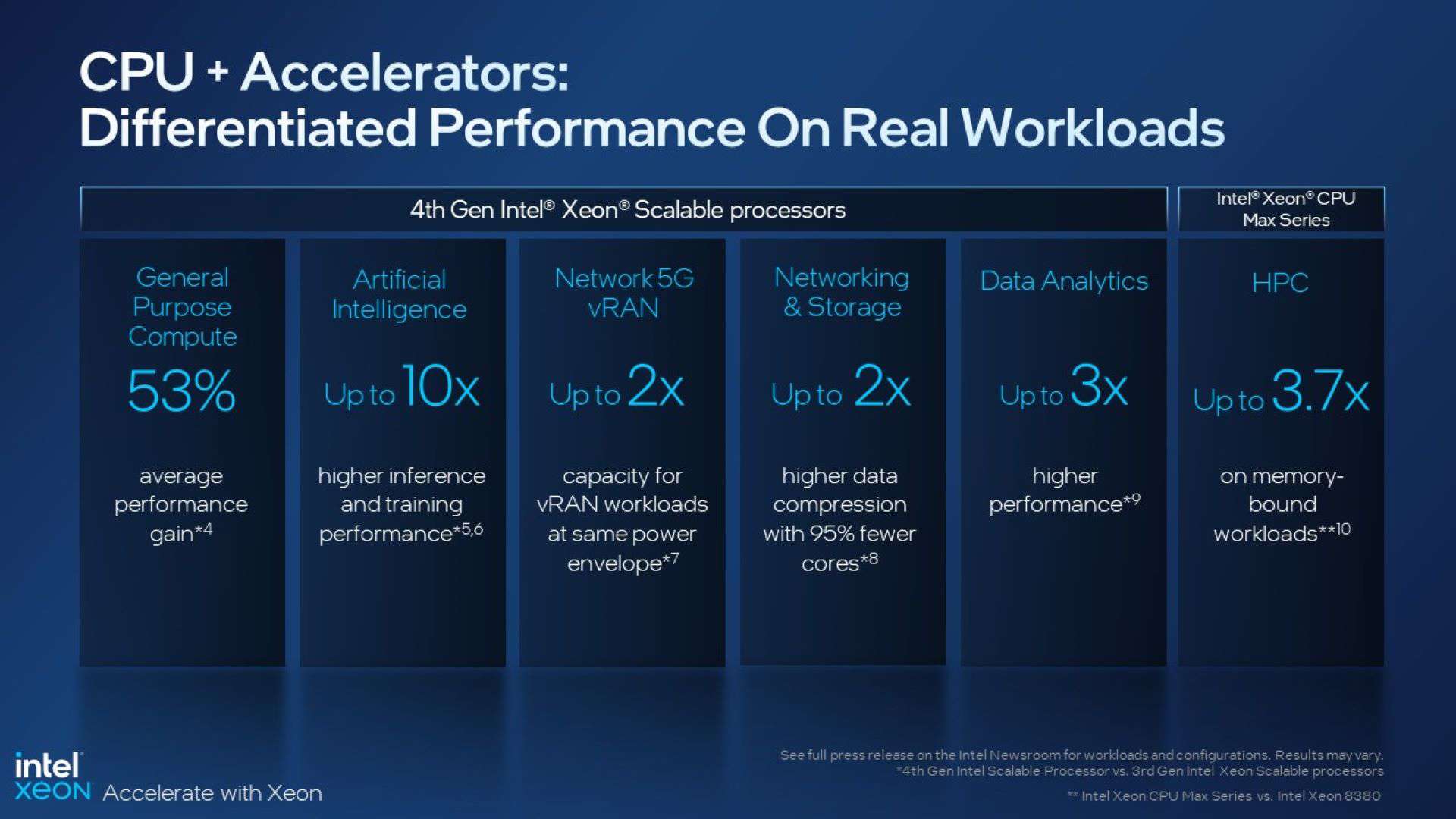

- With the most built-in accelerators of any CPU in the world for key workloads such as AI, analytics, networking, security, storage and high performance computing (HPC), 4th Gen Intel Xeon Scalable and Intel Max Series families deliver leadership performance in a purpose-built workload-first approach.

- 4th Gen Intel Xeon Scalable processors are Intel’s most sustainable data center processors, delivering a range of features for optimizing power and performance, making optimal use of CPU resources to help achieve customers’ sustainability goals.

- When compared with prior generations, 4th Gen Xeon customers can expect a 2.9x¹ average performance per watt efficiency improvement for targeted workloads when utilizing built-in accelerators, up to 70-watt² power savings per CPU in optimized power mode with minimal performance loss for select workloads and a 52% to 66% lower total cost of ownership (TCO)³.

SANTA CLARA, Calif., Jan. 10, 2023 – Intel today marked one of the most important product launches in company history with the unveiling of 4th Gen Intel® Xeon® Scalable processors (code-named Sapphire Rapids), the Intel® Xeon® CPU Max Series (code-named Sapphire Rapids HBM) and the Intel® Data Center GPU Max Series (code-named Ponte Vecchio), delivering for its customers a leap in data center performance, efficiency, security and new capabilities for AI, the cloud, the network and edge, and the world’s most powerful supercomputers.

Working alongside its customers and partners with 4th Gen Xeon, Intel is delivering differentiated solutions and systems at scale to tackle their biggest computing challenges. Intel’s unique approach to providing purpose-built, workload-first acceleration and highly optimized software tuned for specific workloads enables the company to deliver the right performance at the right power for optimal overall total cost of ownership.

Press Kit: 4th Gen Xeon Scalable Processors

Additionally, as Intel’s most sustainable data center processors, 4th Gen Xeon processors deliver customers a range of features for managing power and performance, making the optimal use of CPU resources to help achieve their sustainability goals.

“The launch of 4th Gen Xeon Scalable processors and the Max Series product family is a pivotal moment in fueling Intel’s turnaround, reigniting our path to leadership in the data center and growing our footprint in new arenas,” said Sandra Rivera, Intel executive vice president and general manager of the Data Center and AI Group. “Intel’s 4th Gen Xeon and the Max Series product family deliver what customers truly want – leadership performance and reliability within a secure environment for their real-world requirements – driving faster time to value and powering their pace of innovation.”

Unlike any other data center processor on the market and already in the hands of customers today, the 4th Gen Xeon family greatly expands on Intel’s purpose-built, workload-first strategy and approach.

Leading Performance and Sustainability Benefits with the Most Built-In Acceleration

Today, there are over 100 million Xeons installed in the market – from on-prem servers running IT services, including new as-a-service business models, to networking equipment managing Internet traffic, to wireless base station computing at the edge, to cloud services.

Building on decades of data center, network and intelligent edge innovation and leadership, new 4th Gen Xeon processors deliver leading performance with the most built-in accelerators of any CPU in the world to tackle customers’ most important computing challenges across AI, analytics, networking, security, storage and HPC.

When compared with prior generations, 4th Gen Intel Xeon customers can expect a 2.9x¹ average performance per watt efficiency improvement for targeted workloads when utilizing built-in accelerators, up to 70-watt² power savings per CPU in optimized power mode with minimal performance loss, and a 52% to 66% lower TCO³.

Sustainability

The expansiveness of built-in accelerators included in 4th Gen Xeon means Intel delivers platform-level power savings, lessening the need for additional discrete acceleration and helping our customers achieve their sustainability goals. Additionally, the new Optimized Power Mode can deliver up to 20% socket power savings with a less than 5% performance impact for selected workloads¹¹. New innovations in air and liquid cooling reduce total data center energy consumption further, and 4th Gen Xeon has been manufactured with 90% or more renewable electricity at Intel sites with state-of-the-art water reclamation facilities.
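As a rough illustration of what the Optimized Power Mode figures imply, the sketch below combines the two quoted bounds into a performance-per-watt ratio. It assumes, purely for illustration, that the full 20% socket power saving and the full 5% performance impact occur together; the function name `perf_per_watt_gain` is hypothetical, and real workloads will differ.

```python
def perf_per_watt_gain(power_saving: float, perf_loss: float) -> float:
    """Relative performance-per-watt after a power cut and a performance drop.

    Both arguments are fractions of the baseline (0.20 means 20%).
    """
    if not 0.0 <= power_saving < 1.0 or not 0.0 <= perf_loss < 1.0:
        raise ValueError("fractions must be in the range [0, 1)")
    return (1.0 - perf_loss) / (1.0 - power_saving)


# Assumption: both quoted bounds are hit at once (20% less power, 5% less performance).
gain = perf_per_watt_gain(power_saving=0.20, perf_loss=0.05)
print(f"Relative performance per watt: {gain:.2f}x")
```

Because the quoted performance impact is "less than 5%", a workload that actually reaches the 20% saving would do at least this well on a per-watt basis.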

Artificial Intelligence

In AI, and compared to the previous generation, 4th Gen Xeon processors achieve up to 10x⁵,⁶ higher PyTorch real-time inference and training performance with built-in Intel® Advanced Matrix Extensions (Intel® AMX) accelerators. Intel’s 4th Gen Xeon unlocks new levels of performance for inference and training across a wide breadth of AI workloads. The Xeon CPU Max Series expands on these capabilities for natural language processing, with customers seeing up to a 20x¹² speed-up on large language models. With the delivery of Intel’s AI software suite, developers can use their AI tool of choice, while increasing productivity and speeding time to AI development. The suite is portable from the workstation, enabling it to scale out in the cloud and all the way out to the edge. And it has been validated with over 400 machine learning and deep learning AI models across the most common AI use cases in every business segment.
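For readers who want to confirm that a given Linux host exposes Intel AMX, a minimal sketch follows. It assumes a kernel recent enough to report the `amx_tile`, `amx_bf16` and `amx_int8` feature flags in `/proc/cpuinfo`; the helper names are hypothetical and not part of any Intel tool.

```python
from __future__ import annotations

# CPU feature flags that recent Linux kernels report for Intel AMX.
AMX_FLAGS = ("amx_tile", "amx_bf16", "amx_int8")


def amx_flags_from_cpuinfo(cpuinfo_text: str) -> set[str]:
    """Return the AMX flags found on the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            present = set(value.split())
            return {flag for flag in AMX_FLAGS if flag in present}
    return set()


def read_local_amx_flags(path: str = "/proc/cpuinfo") -> set[str]:
    """Read the local cpuinfo file; return an empty set if it is unavailable."""
    try:
        with open(path, encoding="utf-8") as handle:
            return amx_flags_from_cpuinfo(handle.read())
    except OSError:
        return set()


if __name__ == "__main__":
    found = read_local_amx_flags()
    print("AMX flags reported:", sorted(found) if found else "none")
```

An empty result means only that the flags were not reported; an older kernel or a virtual machine that masks the feature would give the same answer on AMX-capable hardware.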

Networking

4th Gen Xeon offers a family of processors specifically optimized for high-performance, low-latency network and edge workloads. These processors are a critical part of the foundation driving a more software-defined future for industries ranging from telecommunications and retail to manufacturing and smart cities. For 5G core workloads, built-in accelerators help increase throughput and decrease latency, while advances in power management enhance both the responsiveness and the efficiency of the platform. And, when compared to previous generations, 4th Gen Xeon delivers up to twice the virtualized radio access network (vRAN) capacity without increasing power consumption. This enables communications service providers to double the performance-per-watt to meet their critical performance, scaling and energy efficiency needs.

High Performance Computing

4th Gen Xeon and the Intel Max Series product family bring a scalable, balanced architecture that integrates CPU and GPU with oneAPI’s open software ecosystem for demanding computing workloads in HPC and AI, solving the world’s most challenging problems.

The Xeon CPU Max Series is the first and only x86-based processor with high bandwidth memory, accelerating many HPC workloads without the need for code changes. The Intel Data Center GPU Max Series is Intel’s highest-density processor and will be available in several form factors that address different customer needs.

The Xeon CPU Max Series offers 64 gigabytes of high bandwidth memory (HBM2e) on the package, significantly increasing data throughput for HPC and AI workloads. Compared with top-end 3rd Gen Intel® Xeon® Scalable processors, the Xeon CPU Max Series provides up to 3.7 times¹⁰ more performance on a range of real-world applications like energy and earth systems modeling.

Further, the Data Center GPU Max Series packs over 100 billion transistors into a 47-tile package, bringing new levels of throughput to challenging workloads like physics, financial services and life sciences. When paired with the Xeon CPU Max Series, the combined platform achieves up to 12.8 times¹³ greater performance than the prior generation when running the LAMMPS molecular dynamics simulator.

Most Feature-Rich and Secure Xeon Platform Yet

Signifying the biggest platform transformation Intel has delivered, not only is 4th Gen Xeon a marvel of acceleration, but it is also an achievement in manufacturing, combining up to four Intel 7-built tiles on a single package, connected using Intel EMIB (embedded multi-die interconnect bridge) packaging technology and delivering new features including increased memory bandwidth with DDR5, increased I/O bandwidth with PCIe 5.0 and Compute Express Link (CXL) 1.1 interconnect.

At the foundation of it all is security. With 4th Gen Xeon, Intel is delivering the most comprehensive confidential computing portfolio of any data center silicon provider in the industry, enhancing data security, regulatory compliance and data sovereignty. Intel remains the only silicon provider to offer application isolation for data center computing with Intel® Software Guard Extensions (Intel® SGX), which provides today’s smallest attack surface for confidential computing in private, public and cloud-to-edge environments. Additionally, Intel’s new virtual-machine (VM) isolation technology, Intel® Trust Domain Extensions (Intel® TDX), is ideal for porting existing applications into a confidential environment and will debut with Microsoft Azure, Alibaba Cloud, Google Cloud and IBM Cloud.

Finally, the modular architecture of 4th Gen Xeon allows Intel to offer a wide range of processors across nearly 50 targeted SKUs for customer use cases or applications, from mainstream general-purpose SKUs to purpose-built SKUs for cloud, database and analytics, networking, storage, and single-socket edge use cases. The 4th Gen Xeon processor family is On Demand-capable and varies in core count, frequency, mix of accelerators, power envelope and memory throughput as is appropriate for target use cases and form factors addressing customers’ real-world requirements.

SKU TABLE: SKUs for 4th Gen Xeon and Intel Xeon CPU Max Series

¹ Geomean of the following workloads: RocksDB (IAA vs ZSTD), ClickHouse (IAA vs ZSTD), SPDK large media and database request proxies (DSA vs out of box), Image Classification ResNet-50 (AMX vs VNNI), Object Detection SSD-ResNet-34 (AMX vs VNNI), QATzip (QAT vs zlib)
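Several of these notes report a geomean (geometric mean) of per-workload speedups. The sketch below shows how such an aggregate is computed and why it sits below the arithmetic mean when the speedups differ. The two input values are hypothetical placeholders chosen for easy arithmetic, not Intel measurements.

```python
import math
from typing import Sequence


def geomean(ratios: Sequence[float]) -> float:
    """Geometric mean of positive ratios, computed in log space for stability."""
    if not ratios:
        raise ValueError("at least one ratio is required")
    if any(r <= 0 for r in ratios):
        raise ValueError("ratios must be positive")
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Hypothetical per-workload speedups, for illustration only.
hypothetical_speedups = [2.0, 8.0]

geometric = geomean(hypothetical_speedups)
arithmetic = sum(hypothetical_speedups) / len(hypothetical_speedups)
print(f"geomean = {geometric:.2f}x, arithmetic mean = {arithmetic:.2f}x")
```

The geometric mean is the usual choice for averaging ratios because a single large speedup influences it less than it would an arithmetic mean.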

² 1-node, Intel Reference Validation Platform, 2x Intel® Xeon 8480+ (56C, 2GHz, 350W TDP), HT On, Turbo ON, Total Memory: 1 TB (16 slots/ 64GB/ 4800 MHz), 1x P4510 3.84TB NVMe PCIe Gen4 drive, BIOS: 0091.D05, (ucode:0x2b0000c0), CentOS Stream 8, 5.15.0-spr.bkc.pc.10.4.11.x86_64, Java Perf/Watt w/ openjdk-11+28_linux-x64_bin, 112 instances, 1550MB Initial/Max heap size, Tested by Intel as of Oct 2022.

³ ResNet50 Image Classification

New Configuration: 1-node, 2x pre-production 4th Gen Intel® Xeon® Scalable 8490H processor (60 core) with Intel® Advanced Matrix Extensions (Intel AMX), on pre-production SuperMicro SYS-221H-TNR with 1024GB DDR5 memory (16x64 GB), microcode 0x2b0000c0, HT On, Turbo On, SNC Off, CentOS Stream 8, 5.19.16-301.fc37.x86_64, 1x3.84TB P5510 NVMe, 10GbE x540-AT2, Intel TF 2.10, AI Model=Resnet 50 v1_5, best scores achieved: BS1 AMX 1 core/instance (max. 15ms SLA), using physical cores, tested by Intel November 2022. Baseline: 1-node, 2x production 3rd Gen Intel Xeon Scalable 8380 Processor (40 cores) on SuperMicro SYS-220U-TNR, DDR4 memory total 1024GB (16x64 GB), microcode 0xd000375, HT On, Turbo On, SNC Off, CentOS Stream 8, 5.19.16-301.fc37.x86_64, 1x3.84TB P5510 NVMe, 10GbE x540-AT2, Intel TF 2.10, AI Model=Resnet 50 v1_5, best scores achieved: BS1 INT8 2 cores/instance (max. 15ms SLA), using physical cores, tested by Intel November 2022.

For a 50 server fleet of 3rd Gen Xeon 8380 (RN50 w/DLBoost), estimated as of November 2022:

CapEx costs: $1.64M

OpEx costs (4 year, includes power and cooling utility costs, infrastructure and hardware maintenance costs): $739.9K

Energy use in kWh (4 year, per server): 44627, PUE 1.6

Other assumptions: utility cost $0.1/kWh, kWh to kg CO2 factor 0.42394

For a 17 server fleet of 4th Gen Xeon 8490H (RN50 w/AMX), estimated as of November 2022:

CapEx costs: $799.4K

OpEx costs (4 year, includes power and cooling utility costs, infrastructure and hardware maintenance costs): $275.3K

Energy use in kWh (4 year, per server): 58581, PUE 1.6

AI: 55% lower TCO by deploying fewer 4th Gen Intel® Xeon® processor-based servers to meet the same performance requirement. See [E7] at intel.com/processorclaims: 4th Gen Intel Xeon Scalable processors. Results may vary.

Database: 52% lower TCO by deploying fewer 4th Gen Intel® Xeon® processor-based servers to meet the same performance requirement. See [E8] at intel.com/processorclaims: 4th Gen Intel Xeon Scalable processors. Results may vary.

HPC: 66% lower TCO by deploying fewer Intel® Xeon® CPU Max processor-based servers to meet the same performance requirement. See [E9] at intel.com/processorclaims: 4th Gen Intel Xeon Scalable processors. Results may vary.
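The AI figure can be reproduced from the two ResNet50 fleet estimates listed in this note. The sketch below assumes that TCO is simply four-year CapEx plus OpEx and that fleet energy is the per-server figure multiplied by the server count; both are readings of the note rather than Intel's published method, and the `Fleet` class is a hypothetical helper.

```python
from dataclasses import dataclass

KG_CO2_PER_KWH = 0.42394  # conversion factor stated in the note


@dataclass(frozen=True)
class Fleet:
    servers: int
    capex_usd: float
    opex_usd: float      # 4-year operating cost
    kwh_per_server: int  # 4-year energy use per server

    @property
    def tco_usd(self) -> float:
        # Assumption: TCO = CapEx + OpEx over the 4-year window.
        return self.capex_usd + self.opex_usd

    @property
    def fleet_kwh(self) -> int:
        # Assumption: fleet energy = per-server energy x server count.
        return self.servers * self.kwh_per_server


baseline = Fleet(servers=50, capex_usd=1_640_000, opex_usd=739_900, kwh_per_server=44_627)
new = Fleet(servers=17, capex_usd=799_400, opex_usd=275_300, kwh_per_server=58_581)

tco_reduction = 1 - new.tco_usd / baseline.tco_usd
energy_reduction = 1 - new.fleet_kwh / baseline.fleet_kwh
co2_saved_kg = (baseline.fleet_kwh - new.fleet_kwh) * KG_CO2_PER_KWH

print(f"TCO reduction:    {tco_reduction:.1%}")
print(f"Energy reduction: {energy_reduction:.1%}")
print(f"CO2 avoided:      {co2_saved_kg:,.0f} kg over 4 years")
```

Under these assumptions the TCO reduction rounds to the quoted 55%, even though each new server draws more energy than each baseline server, because far fewer servers are needed.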

⁴ Geomean of HP Linpack, Stream Triad, SPECrate2017_fp_base est, SPECrate2017_int_base est. See [G2, G4, G6] at intel.com/processorclaims: 4th Gen Intel Xeon Scalable.

⁵ Up to 10x higher PyTorch real-time inference performance with built-in Intel® Advanced Matrix Extensions (Intel® AMX) (BF16) vs. the prior generation (FP32)

PyTorch geomean of ResNet50, Bert-Large, MaskRCNN, SSD-ResNet34, RNN-T, Resnext101.

⁶ Up to 10x higher PyTorch training performance with built-in Intel® Advanced Matrix Extensions (Intel® AMX) (BF16) vs. the prior generation (FP32)

PyTorch geomean of ResNet50, Bert-Large, DLRM, MaskRCNN, SSD-ResNet34, RNN-T.

⁷ Estimated as of 8/30/2022 based on 4th generation Intel® Xeon® Scalable processor architecture improvements vs 3rd generation Intel® Xeon® Scalable processor at similar core count, socket power and frequency on a test scenario using FlexRAN™ software. Results may vary.

⁸ Up to 95% fewer cores and 2x higher level 1 compression throughput with 4th Gen Intel Xeon Platinum 8490H using integrated Intel QAT vs. prior generation.

8490H: 1-node, pre-production platform with 2x 4th Gen Intel® Xeon Scalable Processor (60 core) with integrated Intel QuickAssist Accelerator (Intel QAT), QAT device utilized=8 (2 sockets active), with Total 1024GB (16x64 GB) DDR5 memory, microcode 0xf000380, HT On, Turbo Off, SNC Off, Ubuntu 22.04.1 LTS, 5.15.0-47-generic, 1x 1.92TB Intel® SSDSC2KG01, QAT v20.l.0.9.1, QATzip v1.0.9, ISA-L v2.3.0, tested by Intel September 2022.

8380: 1-node, 2x 3rd Gen Intel Xeon Scalable Processors (40 cores) on Coyote Pass platform, DDR4 memory total 1024GB (16x64 GB), microcode 0xd000375, HT On, Turbo Off, SNC Off, Ubuntu 22.04.1 LTS, 5.15.0-47-generic, 1x 1.92TB Intel SSDSC2KG01, QAT v1.7.l.4.16, QATzip v1.0.9, ISA-L v2.3.0, tested by Intel October 2022.

⁹ Up to 3x higher RocksDB performance with 4th Gen Intel Xeon Platinum 8490H using integrated Intel IAA vs. prior generation.

8490H: 1-node, pre-production Intel platform with 2x 4th Gen Intel Xeon Scalable Processor (60 cores) with integrated Intel In-Memory Analytics Accelerator (Intel IAA), HT On, Turbo On, Total Memory 1024GB (16x64GB DDR5 4800), microcode 0xf000380, 1x 1.92TB INTEL SSDSC2KG01, Ubuntu 22.04.1 LTS, 5.18.12-051812-generic, QPL v0.1.21, accel-config-v3.4.6.4, ZSTD v1.5.2, RocksDB v6.4.6 (db_bench), tested by Intel September 2022.

8380: 1-node, 2x 3rd Gen Intel Xeon Scalable Processors (40 cores) on Coyote Pass platform, HT On, Turbo On, SNC Off, Total Memory 1024GB (16x64GB DDR4 3200), microcode 0xd000375, 1x 1.92TB INTEL SSDSC2KG01, Ubuntu 22.04.1 LTS, 5.18.12-051812-generic, ZSTD v1.5.2, RocksDB v6.4.6 (db_bench), tested by Intel October 2022.

¹⁰ Intel® Xeon® 8380: Test by Intel as of 10/7/2022. 1-node, 2x Intel® Xeon® 8380 CPU, HT On, Turbo On, Total Memory 256 GB (16x16GB 3200MT/s DDR4), BIOS Version SE5C620.86B.01.01.0006.2207150335, ucode revision=0xd000375, Rocky Linux 8.6, Linux version 4.18.0-372.26.1.el8_6.crt1.x86_64, YASK v3.05.07

Intel® Xeon® CPU Max Series: Test by Intel as of ww36’22. 1-node, 2x Intel® Xeon® CPU Max Series, HT On, Turbo On, SNC4, Total Memory 128 GB (8x16GB HBM2 3200MT/s), BIOS Version SE5C7411.86B.8424.D03.2208100444, ucode revision=0x2c000020, CentOS Stream 8, Linux version 5.19.0-rc6.0712.intel_next.1.x86_64+server, YASK v3.05.07.

¹¹ Up to 20% system power savings utilizing 4th Gen Xeon Scalable with Optimized Power mode on vs off on select workloads including SpecJBB, SPECINT and NGINX key handshake.

¹² AMD Milan: Tested by Numenta as of 11/28/2022. 1-node, 2x AMD EPYC 7R13 on AWS m6a.48xlarge, 768 GB DDR4-3200, Ubuntu 20.04 Kernel 5.15, OpenVINO 2022.3, BERT-Large, Sequence Length 512, Batch Size 1

Intel® Xeon® 8480+: Tested by Numenta as of 11/28/2022. 1-node, 2x Intel® Xeon® 8480+, 512 GB DDR5-4800, Ubuntu 22.04 Kernel 5.17, OpenVINO 2022.3, Numenta-Optimized BERT-Large, Sequence Length 512, Batch Size 1

Intel® Xeon® Max 9468: Tested by Numenta as of 11/30/2022. 1-node, 2x Intel® Xeon® Max 9468, 128 GB HBM2e 3200 MT/s, Ubuntu 22.04 Kernel 5.15, OpenVINO 2022.3, Numenta-Optimized BERT-Large, Sequence Length 512, Batch Size 1

¹³ Intel® Xeon® 8380: Test by Intel as of 10/28/2022. 1-node, 2x Intel® Xeon® 8380 CPU, HT On, Turbo On, Total Memory 256 GB (16x16GB 3200MT/s, Dual-Rank), BIOS Version SE5C6200.86B.0020.P23.2103261309, ucode revision=0xd000270, Rocky Linux 8.6, Linux version 4.18.0-372.19.1.el8_6.crt1.x86_64

Intel® Xeon® CPU Max Series HBM: Test by Intel as of 10/28/2022. 1-node, 2x Intel® Xeon® Max 9480, HT On, Turbo On, Total Memory 128 GB HBM2e, BIOS EGSDCRB1.DWR.0085.D12.2207281916, ucode 0xac000040, SUSE Linux Enterprise Server 15 SP3, Kernel 5.3.18, oneAPI 2022.3.0

Intel® Data Center GPU Max Series with DDR Host: Test by Intel as of 10/28/2022. 1-node, 2x Intel® Xeon® Max 9480, HT On, Turbo On, Total Memory 1024 GB DDR5-4800 + 128 GB HBM2e, Memory Mode: Flat, HBM2e not used, 6x Intel® Data Center GPU Max Series, BIOS EGSDCRB1.DWR.0085.D12.2207281916, ucode 0xac000040, Agama pvc-prq-54, SUSE Linux Enterprise Server 15 SP3, Kernel 5.3.18, oneAPI 2022.3.0

Intel® Data Center GPU Max Series with HBM Host: Test by Intel as of 10/28/2022. 1-node, 2x Intel® Xeon® Max 9480, HT On, Turbo On, Total Memory 128 GB HBM2e, 6x Intel® Data Center GPU Max Series, BIOS EGSDCRB1.DWR.0085.D12.2207281916, ucode 0xac000040, Agama pvc-prq-54, SUSE Linux Enterprise Server 15 SP3, Kernel 5.3.18, oneAPI 2022.3.0