NVIDIA Ampere GPUs Come to Google Cloud at Speed of Light

Just weeks after launch, NVIDIA A100 GPUs land on Google Compute Engine.

The NVIDIA A100 Tensor Core GPU has landed on Google Cloud.

Available in alpha on Google Compute Engine just over a month after its introduction, A100 has come to the cloud faster than any NVIDIA GPU in history.

Today’s introduction of the Accelerator-Optimized VM (A2) instance family featuring A100 makes Google the first cloud service provider to offer the new NVIDIA GPU.

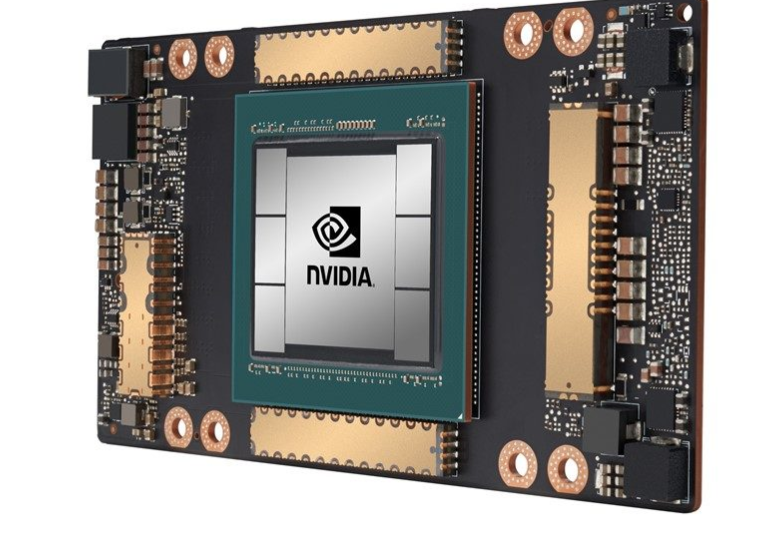

A100, which is built on the newly introduced NVIDIA Ampere architecture, delivers NVIDIA’s greatest generational leap ever. It boosts training and inference computing performance by up to 20x over its predecessors, providing tremendous speedups for the workloads powering the AI revolution.

“Google Cloud customers often look to us to provide the latest hardware and software services to help them drive innovation on AI and scientific computing workloads,” said Manish Sainani, director of Product Management at Google Cloud. “With our new A2 VM family, we are proud to be the first major cloud provider to market NVIDIA A100 GPUs, just as we were with NVIDIA T4 GPUs. We are excited to see what our customers will do with these new capabilities.”

In cloud data centers, A100 can power a broad range of compute-intensive applications, including AI training and inference, data analytics, scientific computing, genomics, edge video analytics, 5G services, and more.

Fast-growing, critical industries will be able to accelerate their discoveries with the breakthrough performance of A100 on Google Compute Engine. From scaling up AI training and scientific computing, to scaling out inference applications, to enabling real-time conversational AI, A100 accelerates complex and unpredictable workloads of all sizes running in the cloud.

NVIDIA CUDA 11, coming to general availability soon, makes the new capabilities of NVIDIA A100 GPUs accessible to developers, including Tensor Cores, mixed-precision modes, multi-instance GPU, advanced memory management and standard C++/Fortran parallel language constructs.

Breakthrough A100 Performance in the Cloud for Every Size Workload

The new A2 VM instances can deliver different levels of performance to efficiently accelerate workloads across CUDA-enabled machine learning training and inference, data analytics and high-performance computing.

For large, demanding workloads, Google Compute Engine offers customers the a2-megagpu-16g instance, which comes with 16 A100 GPUs, offering a total of 640GB of GPU memory and 1.3TB of system memory, with all 16 GPUs connected through NVSwitch at up to 9.6TB/s of aggregate bandwidth.
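To put the a2-megagpu-16g totals in per-GPU terms, the short sketch below does simple arithmetic on the figures quoted above. It assumes decimal units (1.3TB = 1,300GB, 9.6TB/s = 9,600GB/s) and an even split of resources across the 16 GPUs; splitting the aggregate NVSwitch bandwidth evenly is a simplification for intuition, not a description of how traffic is actually routed.

```python
# Instance-wide totals quoted for a2-megagpu-16g.
# Assumption: decimal units, so 1.3TB -> 1,300GB and 9.6TB/s -> 9,600GB/s.
NUM_GPUS = 16
TOTAL_GPU_MEMORY_GB = 640
TOTAL_SYSTEM_MEMORY_GB = 1300
AGGREGATE_BANDWIDTH_GB_S = 9600


def per_gpu_share(total: float, num_gpus: int = NUM_GPUS) -> float:
    """Divide an instance-wide total evenly across its GPUs (a simplification)."""
    return total / num_gpus


gpu_mem = per_gpu_share(TOTAL_GPU_MEMORY_GB)         # GPU memory per A100
sys_mem = per_gpu_share(TOTAL_SYSTEM_MEMORY_GB)      # host memory per A100
bandwidth = per_gpu_share(AGGREGATE_BANDWIDTH_GB_S)  # even share of NVSwitch bandwidth

print(f"GPU memory per A100:      {gpu_mem:.0f} GB")
print(f"System memory per A100:   {sys_mem:.2f} GB")
print(f"Bandwidth share per A100: {bandwidth:.0f} GB/s")
```

Run as-is, this reports 40 GB of GPU memory, 81.25 GB of system memory and a 600 GB/s bandwidth share per GPU.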

For those with smaller workloads, Google Compute Engine is also offering A2 VMs in smaller configurations to match specific applications’ needs.

Google Cloud announced that additional NVIDIA A100 support is coming soon to Google Kubernetes Engine, Cloud AI Platform and other Google Cloud services. For more information, including technical details on the new A2 VM family and how to sign up for access, visit the Google Cloud blog.